In the last part of this three-part series, we’re going to complete the project by separating the camera logic from the UI, with a network interface in between. This approach allows for seamless interaction between the camera as a server and the UI as a client, whilst keeping everything signal-driven.

For parts 1 and 2, we had a single Python file which contained all the code. With the move to a network-based approach, it makes sense to split it into two separate scripts: one for the server (the camera) and another for the client (the GUI). There is some overlap between the two, so a new consts.py file will provide a common base for the enums and for reading and writing the message packets.

The consts.py file is the ideal place for some of the enums we’ve already defined, and they make a great starting point for the file.

```python
class PiCameraStatus(object):
    """
    The Status that the PiCamera can be in
    """
    RECORDING = "Recording"
    STANDING_BY = "Standing By"
    NOT_CONNECTED = "Not Connected"


class CaptureInfo(object):
    """
    Combos for CaptureInfo such as frame rate and resolutions
    Each value is (width, height, frame rate)
    """
    VIDEO_1080p_30 = (1920, 1080, 30)
    VIDEO_720p_60 = (1280, 720, 60)
    VIDEO_640p_90 = (640, 480, 90)  # 640x480 (VGA) at 90fps, despite the name
```

Let’s extend that with a NetworkCommands enum so that when we’re passing messages between the client and the server, we know what they are for.

```python
class NetworkCommands(object):
    """
    Enums for Network commands
    """
    PORT = 9898

    # Commands
    START_RECORDING = 0
    STOP_RECORDING = 1
    SAVE_RECORDING = 2
    SET_BRIGHTNESS = 3
    SET_CONSTRAST = 4
    SET_ANNOTATE_TEXT = 5
    SET_CAMERA_INFO = 6
    SET_ROTATION = 7
    CLOSE_CAMERA = 8

    # Signals
    SIGNAL_NEW_PREVIEW_FRAME = 20
    SIGNAL_CAMERA_ERROR = 21
    SIGNAL_CAMERA_STATUS_CHANGED = 22
    SIGNAL_CAMERA_BRIGHTNESS_CHANGED = 23
    SIGNAL_CAMERA_CONSTRAST_CHANGED = 24
    SIGNAL_RECORDING_STARTED = 25
    SIGNAL_RECORDING_STOPPED = 26
    SIGNAL_RECORDING_SAVED_START_PROCESSING = 27
    SIGNAL_RECORDING_SAVE_FINISHED_PROCESSING = 28
    SIGNAL_RECORDING_SAVE_ERROR_PROCESSING = 29
```

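The list of network commands is split into two groups. The first is commands, which the client sends to set parameters or trigger actions on the camera (such as the brightness, the rotation, and starting a recording). The second is signals, which are events the server reports back so that the client can emit them (a new preview frame, the camera contrast changing, and so on). Commands are numbered from 0 and signals from 20.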
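Plain class constants don’t give you a way to turn a number back into a name, which is handy when logging an unexpected message. Below is a minimal sketch of one way to get that. It assumes Python 3’s `enum` module (or the enum34 backport on Python 2), and it moves the commands and signals into a hypothetical `CommandIndex` IntEnum, leaving the port out:

```python
from enum import IntEnum, unique


@unique  # Raises ValueError at import time if two members share a value
class CommandIndex(IntEnum):
    """Hypothetical IntEnum version of the NetworkCommands values (PORT excluded)."""
    # Commands: client -> server
    START_RECORDING = 0
    STOP_RECORDING = 1
    SAVE_RECORDING = 2
    SET_BRIGHTNESS = 3
    SET_CONSTRAST = 4
    SET_ANNOTATE_TEXT = 5
    SET_CAMERA_INFO = 6
    SET_ROTATION = 7
    CLOSE_CAMERA = 8
    # Signals: server -> client
    SIGNAL_NEW_PREVIEW_FRAME = 20
    SIGNAL_CAMERA_ERROR = 21
    SIGNAL_CAMERA_STATUS_CHANGED = 22
    SIGNAL_CAMERA_BRIGHTNESS_CHANGED = 23
    SIGNAL_CAMERA_CONSTRAST_CHANGED = 24
    SIGNAL_RECORDING_STARTED = 25
    SIGNAL_RECORDING_STOPPED = 26
    SIGNAL_RECORDING_SAVED_START_PROCESSING = 27
    SIGNAL_RECORDING_SAVE_FINISHED_PROCESSING = 28
    SIGNAL_RECORDING_SAVE_ERROR_PROCESSING = 29


FIRST_SIGNAL = 20  # Assumption: every index from 20 upwards is a signal


def isSignal(index):
    """True if the index is a server -> client event rather than a command."""
    return index >= FIRST_SIGNAL


def describe(index):
    """Readable name for a raw command index, for logging."""
    try:
        return CommandIndex(index).name
    except ValueError:
        return "UNKNOWN({})".format(index)


print(describe(25))
print(describe(99))
```

IntEnum members are ints, so they compare equal to the raw numbers read off the wire. I haven’t checked whether PySide’s `writeUInt8` accepts them directly or needs an explicit `int()`.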

Within consts.py, we’re also going to make a parser class. It takes a message, made up of one of the NetworkCommands and a list of args, and encodes it to send over the wire. It also decodes such messages on the other side.

I’ve opted to allow two kinds of argument in the NetworkParser. The first is standard Python types, which are pickled when serialised. The second is QByteArrays. I wanted to be able to send text and numbers across simply, while still having an efficient way to transfer the preview frame. On the camera side the preview frame is held in memory as a QImage, so its data can be written straight out to a QByteArray on the C++ side of PySide. That is very efficient, compared with converting it to a string that would then be pickled.
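To get a feel for why raw bytes are worth special-casing, here’s a rough size comparison in plain Python. It rests on a few assumptions:

- It uses pickle protocol 0, which is Python 2’s default.
- It models `writeQString` as a 32-bit byte count plus two bytes per character.
- It decodes the pickle as Latin-1 to get one character per byte.

The exact figures differ under Python 2, where a str is pickled via its repr, but the pickled route comes out larger either way.

```python
import os
import pickle


def wireSizes(payload):
    """Return (rawBytes, pickledQStringBytes) for sending payload each way."""
    # _BYTE_ARRAY route: 32-bit size followed by the bytes themselves
    rawSize = 4 + len(payload)

    # _PICKLE route: pickle, then write as a QString (32-bit byte count + UTF-16)
    pickled = pickle.dumps(payload, protocol=0)
    utf16 = pickled.decode("latin-1").encode("utf-16-be")
    qstringSize = 4 + len(utf16)
    return rawSize, qstringSize


frame = os.urandom(20000)  # Stand-in for a PNG-encoded preview frame
rawSize, qstringSize = wireSizes(frame)
print(rawSize, qstringSize, qstringSize / float(rawSize))
```

Every byte of the pickle becomes a two-byte UTF-16 character, so the pickled path is always more than twice the size of the raw one.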

Each message also includes its size. The size tells the receiver whether it has the whole message and can start to parse it, or whether there is still more data to receive before the message can be decoded.
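The size prefix is what makes partial reads safe. TCP delivers a stream of bytes, not discrete messages, so a single `readyRead` may carry half a message or several. Below is a minimal pure-Python sketch of the same framing idea. It uses `struct` with a big-endian 32-bit size, matching QDataStream’s default byte order. `frame` and `takeFrame` are hypothetical names:

```python
import struct

HEADER = struct.Struct(">I")  # Big-endian unsigned 32-bit size prefix


def frame(payload):
    """Prefix payload with its length, like encodeMessage's final step."""
    return HEADER.pack(len(payload)) + payload


def takeFrame(buffer):
    """Remove and return one complete payload from the front of buffer.

    buffer is a bytearray of everything received so far. Returns None and
    leaves buffer untouched if the header or body hasn't fully arrived.
    """
    if len(buffer) < HEADER.size:
        return None
    (size,) = HEADER.unpack_from(buffer)
    end = HEADER.size + size
    if len(buffer) < end:
        return None
    payload = bytes(buffer[HEADER.size:end])
    del buffer[:end]
    return payload


# Simulate two messages arriving in awkward 3-byte chunks
stream = frame(b"hello") + frame(b"camera")
received, messages = bytearray(), []
for i in range(0, len(stream), 3):
    received += stream[i:i + 3]
    while True:  # Drain every complete message, as the readyRead handlers do
        message = takeFrame(received)
        if message is None:
            break
        messages.append(message)
print(messages)
```

Unlike `decodeMessage`, this checks explicitly that the 4-byte header has arrived before reading it, rather than relying on a short read returning 0.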

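Messages are written like this (QDataStream writes integers in big-endian byte order by default):

- Unsigned 32-bit int: packet size (the number of bytes that follow this field)
- Unsigned 8-bit int: the NetworkCommand
- Unsigned 8-bit int: the number of args in the packet
- Then, for each arg:
  - Unsigned 8-bit int: the type of the arg
  - If the arg is a byte array: an unsigned 32-bit int with the size of the array, followed by the data
  - If the arg is a pickle string: the data, written as a QString (which QDataStream stores as an unsigned 32-bit byte count followed by UTF-16 text)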
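The per-argument layout can be mirrored in pure Python too, which is handy for unit-testing the format without a Pi or PySide. This sketch makes three assumptions:

- `bytes` stands in for QByteArray.
- The pickle uses protocol 0 and is stored as UTF-16BE after a Latin-1 decode.
- Only the body is covered; the size prefix would wrap it.

`encodeBody` and `decodeBody` are hypothetical names, and I haven’t checked the output byte-for-byte against a live PySide peer.

```python
import pickle
import struct

BYTE_ARRAY = 0  # Mirrors NetworkParser._BYTE_ARRAY
PICKLE = 1      # Mirrors NetworkParser._PICKLE


def encodeBody(commandIndex, args):
    """Command, arg count, then (type, uint32 size, data) per arg."""
    out = bytearray(struct.pack(">BB", commandIndex, len(args)))
    for item in args:
        if isinstance(item, (bytes, bytearray)):
            out += struct.pack(">BI", BYTE_ARRAY, len(item))
            out += item
        else:
            # Pickle, then lay it out like a QString: byte count + UTF-16BE text
            text = pickle.dumps(item, protocol=0).decode("latin-1").encode("utf-16-be")
            out += struct.pack(">BI", PICKLE, len(text))
            out += text
    return bytes(out)


def decodeBody(body):
    """Inverse of encodeBody; returns (commandIndex, args)."""
    commandIndex, count = struct.unpack_from(">BB", body, 0)
    offset = 2
    args = []
    for _ in range(count):
        argType, size = struct.unpack_from(">BI", body, offset)
        offset += 5  # 1-byte type + 4-byte size, no padding with ">"
        chunk = body[offset:offset + size]
        offset += size
        if argType == BYTE_ARRAY:
            args.append(bytes(chunk))
        elif argType == PICKLE:
            args.append(pickle.loads(chunk.decode("utf-16-be").encode("latin-1")))
        else:
            raise ValueError("unknown arg type {}".format(argType))
    return commandIndex, args


print(decodeBody(encodeBody(5, ["Take 1", "black", 32, True])))
```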

```python
import pickle

from PySide import QtCore


class NetworkParser(object):
    """
    Class to deal with the encoding and decoding messages between the client and server
    """
    _BYTE_ARRAY = 0
    _PICKLE = 1

    @classmethod
    def encodeMessage(cls, commandIndex, listOfArgs):
        """
        Encode a message given the command index and the arguments into a QByteArray

        args:
            commandIndex (NetworkCommands): The command index of the message, what it's for
            listOfArgs (list of objects): List of arguments that will be serialised and passed over

        returns:
            QByteArray of the constructed message
        """
        byteArray = QtCore.QByteArray()
        writer = QtCore.QDataStream(byteArray, QtCore.QIODevice.WriteOnly)
        writer.writeUInt8(commandIndex)
        writer.writeUInt8(len(listOfArgs))
        for item in listOfArgs:
            if isinstance(item, QtCore.QByteArray):
                # Raw bytes: type, size, then the data itself
                writer.writeUInt8(cls._BYTE_ARRAY)
                arraySize = item.size()
                writer.writeUInt32(arraySize)
                writer.device().write(item)
            else:
                # Anything else is pickled and written as a QString
                writer.writeUInt8(cls._PICKLE)
                writer.writeQString(pickle.dumps(item))

        # Prefix the whole thing with its size
        finalArray = QtCore.QByteArray()
        writer = QtCore.QDataStream(finalArray, QtCore.QIODevice.WriteOnly)
        writer.writeUInt32(byteArray.length())
        finalArray.append(byteArray)
        return finalArray

    @classmethod
    def decodeMessage(cls, currentData):
        """
        Decode a byte array message into its command index and arguments

        args:
            currentData (QByteArray): The data to try and read

        returns:
            None if the message is incomplete, or a tuple of int (command index) and list of
            objects as the args
        """
        reader = QtCore.QDataStream(currentData, QtCore.QIODevice.ReadOnly)
        dataSize = reader.readUInt32()

        # Check to see if we have enough data (the size doesn't count its own 4 bytes)
        if currentData.length() < dataSize + 4:
            return None

        # Read the command Index and number of args
        commandIndex = reader.readUInt8()
        numberOfArgs = reader.readUInt8()
        args = []
        for _ in range(numberOfArgs):
            argType = reader.readUInt8()
            if argType == cls._BYTE_ARRAY:
                arraySize = reader.readUInt32()
                newArray = reader.device().read(arraySize)
                args.append(newArray)
            elif argType == cls._PICKLE:
                # Note: unpickling runs code chosen by the sender, so only use
                # this on a network where every peer is trusted
                pickleStr = reader.readQString()
                args.append(pickle.loads(pickleStr))
            else:
                print("Unable to understand variable type enum '{}'".format(argType))
                break  # We can't know where the next arg starts

        # Remove what we've read from the current data
        currentData.remove(0, dataSize + 4)
        return (commandIndex, args)
```

With consts.py complete, we’re going to move the camera wrapper and preview thread code out of the part 2 Python file and into remoteCaptureServer.py. The LivePreviewThread stays mostly the same, with one small change. When we get the image data, we need to shrink it so that it’s more efficient to send over the network. We can do this with the .scaled method on the QImage:

```python
# Shrink the frame to fit within 400x400, keeping its aspect ratio
newImage = QtGui.QImage.fromData(self._camera.getPreveiw()).scaled(400, 400, QtCore.Qt.KeepAspectRatio)
```
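To see what `scaled(400, 400, QtCore.Qt.KeepAspectRatio)` produces, here’s a small sketch of the fit calculation in plain Python. It follows the integer arithmetic that I understand `QSize::scaled` to use for `Qt::KeepAspectRatio`, so treat the exact rounding in edge cases as approximate. `fitKeepAspect` is a hypothetical name:

```python
def fitKeepAspect(width, height, boxWidth, boxHeight):
    """Largest (w, h) inside boxWidth x boxHeight with the same aspect ratio."""
    # Width we'd get if the image filled the box's full height
    widthAtFullHeight = boxHeight * width // height
    if widthAtFullHeight <= boxWidth:
        return widthAtFullHeight, boxHeight
    # Otherwise the width is the limiting side
    return boxWidth, boxWidth * height // width


for width, height in [(1920, 1080), (1280, 720), (640, 480)]:
    w, h = fitKeepAspect(width, height, 400, 400)
    print((width, height), "->", (w, h),
          "pixel reduction: {:.1f}x".format(width * height / float(w * h)))
```

Suppose the preview arrived at full 1080p. It would be sent as 400x225, roughly 1/23 of the pixels, which goes a long way towards explaining the lower preview quality on the client.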

The camera wrapper no longer needs all the Qt signals on it, as they will be emitted through the network interface instead. The code to set up a TCP server with PySide is minimal. It only needs to listen for connections and handle new ones as they are made. Adding this to the constructor looks like this:

```python
import functools
import os
import tempfile

import picamera
from PySide import QtCore, QtNetwork

import consts


class PiCameraWrapperServer(QtCore.QObject):
    """
    A Network based Qt aware wrapper around the Pi's camera API

    Server side, deals with the PiCamera
    """
    _TEMP_FILE = os.path.join(tempfile.gettempdir(), "piCamera", "temp.h264")

    def __init__(self, captureInfo=consts.CaptureInfo.VIDEO_1080p_30):
        """
        Constructor
        """
        super(PiCameraWrapperServer, self).__init__()

        # Listen for clients on the shared port
        self._tcpServer = QtNetwork.QTcpServer()
        self._tcpServer.newConnection.connect(self._handleNewConnection)
        if not self._tcpServer.listen(QtNetwork.QHostAddress.AnyIPv6, consts.NetworkCommands.PORT):
            print("Unable to start server: {}".format(self._tcpServer.errorString()))
        self._connectedClients = []
        self._readData = {}  # Per-connection buffers of not-yet-decoded data

        # Setup the camera
        self._camera = picamera.PiCamera()
        self._setStatus(consts.PiCameraStatus.STANDING_BY)
        self.setCameraInfo(captureInfo)

        self._startTime = "00:00:00:00"

        # Remove any old temp data
        if os.path.exists(self._TEMP_FILE):
            os.remove(self._TEMP_FILE)

        # Setup the preview thread
        self._livePreivew = LivePreviewThread(self)
        self._livePreivew.newPreviewFrame.connect(self._handleNewPreviewFrame)
        self._livePreivew.start()

        print("Server set up, waiting for connections...")
```

When a client connects, the server emits its newConnection signal. In the handler, we connect to the new socket’s disconnected and readyRead signals, so we know when it goes away and when there is data to read. It’s also a great place to send out the camera’s current status and properties.

```python
def _handleNewConnection(self):
    """
    Handle a new client connecting to the server
    """
    connection = self._tcpServer.nextPendingConnection()
    self._readData[connection] = QtCore.QByteArray()
    connection.readyRead.connect(functools.partial(self._handleConnectionReadReady, connection))
    connection.disconnected.connect(functools.partial(self._handleConnectionDisconnect, connection))
    self._connectedClients.append(connection)

    # Send Status, Brightness and Contrast. _sendCommand broadcasts, so existing
    # clients receive these too, which is harmless as they are just state updates
    self._sendCommand(consts.NetworkCommands.SIGNAL_CAMERA_STATUS_CHANGED, [self.status()])
    self._sendCommand(consts.NetworkCommands.SIGNAL_CAMERA_BRIGHTNESS_CHANGED, [self.brightness()])
    self._sendCommand(consts.NetworkCommands.SIGNAL_CAMERA_CONSTRAST_CHANGED, [self.contrast()])
```

When new data is ready to be read on a connection, it is appended to that connection’s own buffer, and we try to decode it with the NetworkParser. Each time a complete message can be decoded, the _parseData method acts on it.

```python
def _handleConnectionReadReady(self, connection):
    """
    Handle the reading of data from the given connection, where there is data to be read
    """
    self._readData[connection].append(connection.readAll())
    while True:
        result = consts.NetworkParser.decodeMessage(self._readData[connection])
        if result is None:
            break
        self._parseData(result)

def _parseData(self, data):
    """
    Parse the messages that came from the client

    args:
        data (consts.NetworkCommands, list of objects): Tuple of consts.NetworkCommands and list
            of args
    """
    commandIdx, args = data
    if commandIdx == consts.NetworkCommands.START_RECORDING:
        self.startRecording()
    elif commandIdx == consts.NetworkCommands.STOP_RECORDING:
        self.stopRecording()
    elif commandIdx == consts.NetworkCommands.SAVE_RECORDING:
        self.saveRecording(args[0])
    elif commandIdx == consts.NetworkCommands.SET_BRIGHTNESS:
        self.setBrightness(args[0])
    elif commandIdx == consts.NetworkCommands.SET_CONSTRAST:
        self.setContrast(args[0])
    elif commandIdx == consts.NetworkCommands.SET_ANNOTATE_TEXT:
        text, colour, size, frameNumbers = args
        self.setAnnotateText(text, colour, size, frameNumbers)
    elif commandIdx == consts.NetworkCommands.SET_CAMERA_INFO:
        captureInfo, stopRecording = args
        self.setCameraInfo(captureInfo, stopRecording)
    elif commandIdx == consts.NetworkCommands.SET_ROTATION:
        self.setRotation(args[0])
```
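As the list of commands grows, the if/elif chain in _parseData could alternatively be written as a dictionary that maps each command index to a handler. Below is a minimal sketch of that swapped-in approach. It is written in plain Python so it runs without a Pi. `RecordingCamera`, `buildHandlers` and `parseData` are hypothetical; `RecordingCamera` is a stand-in for PiCameraWrapperServer that just logs the calls it receives.

```python
# Command indices copied from consts.NetworkCommands
START_RECORDING, STOP_RECORDING, SAVE_RECORDING, SET_BRIGHTNESS = 0, 1, 2, 3
SET_CONSTRAST, SET_ANNOTATE_TEXT, SET_CAMERA_INFO, SET_ROTATION = 4, 5, 6, 7


class RecordingCamera(object):
    """Hypothetical stand-in that records every method call made on it."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        # Only called for attributes that don't exist, i.e. the camera methods
        def method(*args):
            self.calls.append((name,) + args)
        return method


def buildHandlers(camera):
    """Map each command index to a callable taking the decoded args list."""
    return {
        START_RECORDING: lambda args: camera.startRecording(),
        STOP_RECORDING: lambda args: camera.stopRecording(),
        SAVE_RECORDING: lambda args: camera.saveRecording(args[0]),
        SET_BRIGHTNESS: lambda args: camera.setBrightness(args[0]),
        SET_CONSTRAST: lambda args: camera.setContrast(args[0]),
        SET_ANNOTATE_TEXT: lambda args: camera.setAnnotateText(*args),
        SET_CAMERA_INFO: lambda args: camera.setCameraInfo(*args),
        SET_ROTATION: lambda args: camera.setRotation(args[0]),
    }


def parseData(handlers, data):
    """Dispatch one decoded (commandIdx, args) tuple; False if unhandled."""
    commandIdx, args = data
    handler = handlers.get(commandIdx)
    if handler is None:
        return False
    handler(args)
    return True


camera = RecordingCamera()
handlers = buildHandlers(camera)
parseData(handlers, (SET_BRIGHTNESS, [55]))
parseData(handlers, (SET_ANNOTATE_TEXT, ["Take 1", "black", 32, True]))
print(camera.calls)
```

The boolean return makes unhandled indices visible to the caller. With the chain as written, an index such as CLOSE_CAMERA (8) is silently ignored on the server.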

Lastly, for the server connection work, we need a method that builds messages and sends them to the connected clients. Having a single, simple interface for this keeps the code that updates the clients minimal.

```python
def _sendCommand(self, commandIndex, listOfArgs):
    """
    Send a given command to the connected clients

    args:
        commandIndex (consts.NetworkCommands): The command index of the message, what it's for
        listOfArgs (list of objects): List of arguments that will be serialised and passed over
    """
    if len(self._connectedClients) == 0:
        return

    # Encode once, then write the same packet to every client
    package = consts.NetworkParser.encodeMessage(commandIndex, listOfArgs)
    for connection in self._connectedClients:
        connection.write(package)
        if connection.waitForBytesWritten(1000) is False:
            print("Error sending data to {}".format(connection))
```

To finish off the camera server code, we just need to replace the code that originally emitted signals with code that sends them as commands instead. As you can see from the updated _handleNewPreviewFrame and setBrightness methods, calling the network code is easy and not too dissimilar to emitting a signal.

```python
def _handleNewPreviewFrame(self, newFrame):
    """
    handle a new preview frame

    args:
        newFrame (QImage): The new preview frame
    """
    # Encode the frame as PNG straight into a QByteArray
    byteArray = QtCore.QByteArray()
    buff = QtCore.QBuffer(byteArray)
    buff.open(QtCore.QIODevice.WriteOnly)
    newFrame.save(buff, "PNG")
    self._sendCommand(consts.NetworkCommands.SIGNAL_NEW_PREVIEW_FRAME, [byteArray])

def setBrightness(self, value):
    """
    Set the camera's brightness

    args:
        value (int): The camera's brightness
    """
    self._camera.brightness = value
    self._sendCommand(consts.NetworkCommands.SIGNAL_CAMERA_BRIGHTNESS_CHANGED, [value])
```

With the server side done, all that’s left is the client side. The design I went for was a PiCameraWrapperClient class with all the same methods and signals as the PiCameraWrapper class from part 2, but sitting on top of a TCP socket to the server. This let it slot into the existing UI code with only a few small tweaks.

The constructor takes an optional hostname for the camera to connect to, and under the hood it calls a connectToCamera method. This approach lets the user connect to and disconnect from different cameras without having to create a new instance of the wrapper for each hostname.

```python
from PySide import QtCore, QtGui, QtNetwork

import consts


class PiCameraWrapperClient(QtCore.QObject):
    """
    A Network based Qt aware wrapper around the Pi's camera API

    Client side, deals with the Server

    Signals:
        cameraStatusChanged (PiCameraStatus): Emitted when the camera's status changes
        cameraBrightnessChanged (int): Emitted when the camera's brightness is changed
        cameraContrastChanged (int): Emitted when the camera's contrast is changed
        recordingStarted (): Emitted when the recording is started
        recordingStopped (): Emitted when the recording is stopped
        recordingSavedStartProcessing (): Emitted when the recording is saved, start to transcode
        recordingSaveFinishedProcessing (str): Emitted when the recording is saved, finished transcode. Has the final filename
        recordingSaveErrorProcessing (str): Emitted when the processing of a save file errors out. Has the error text
        newPreviewFrame (QImage): Emitted with a new Preview Frame
        networkError (str): Emitted when there is a network error and has the friendly string error
        cameraError (str): Emitted with any camera error
        connectedToCamera (): Emitted when connected to camera
        disconnectedFromCamera (): Emitted when the camera is disconnected
    """
    cameraStatusChanged = QtCore.Signal(object)
    cameraBrightnessChanged = QtCore.Signal(object)
    cameraContrastChanged = QtCore.Signal(object)
    recordingStarted = QtCore.Signal()
    recordingStopped = QtCore.Signal()
    recordingSavedStartProcessing = QtCore.Signal()
    recordingSaveFinishedProcessing = QtCore.Signal(object)
    recordingSaveErrorProcessing = QtCore.Signal(object)
    newPreviewFrame = QtCore.Signal(object)
    cameraError = QtCore.Signal(object)
    networkError = QtCore.Signal(object)
    connectedToCamera = QtCore.Signal()
    disconnectedFromCamera = QtCore.Signal()

    def __init__(self, hostName=None, captureInfo=consts.CaptureInfo.VIDEO_1080p_30):
        """
        Constructor
        """
        super(PiCameraWrapperClient, self).__init__()
        self._connection = QtNetwork.QTcpSocket(self)
        self._connection.disconnected.connect(self._handleCameraDisconnected)
        self._connection.readyRead.connect(self._handleCameraDataRead)
        self._readData = QtCore.QByteArray()  # Buffer of not-yet-decoded data

        if hostName is not None:
            self.connectToCamera(hostName)

    def connectToCamera(self, hostName):
        """
        connect to a camera

        args:
            hostName (str): The hostname of the camera to connect to
        """
        self._connection.connectToHost(hostName, consts.NetworkCommands.PORT)
        if self._connection.waitForConnected(1000):
            self.connectedToCamera.emit()
        else:
            self.networkError.emit(self._connection.errorString())

    def disconnectFromCamera(self):
        """
        disconnect from the camera
        """
        if self._connection.state() == QtNetwork.QTcpSocket.ConnectedState:
            self._connection.disconnectFromHost()
```

Following the same pattern as the server side, the client reads data when it’s ready, decodes it once enough has arrived, and then parses it so it can be acted on.

```python
def _handleCameraDataRead(self):
    """
    Handle reading data off the connection when data is ready to be read
    """
    self._readData.append(self._connection.readAll())
    while True:
        result = consts.NetworkParser.decodeMessage(self._readData)
        if result is None:
            break
        self._parseData(result)

def _parseData(self, data):
    """
    Parse the messages sent from the camera

    args:
        data (consts.NetworkCommands, list of objects): Tuple of consts.NetworkCommands and list
            of args
    """
    commandIdx, args = data
    if commandIdx == consts.NetworkCommands.SIGNAL_NEW_PREVIEW_FRAME:
        self.newPreviewFrame.emit(QtGui.QImage.fromData(args[0]))
    elif commandIdx == consts.NetworkCommands.SIGNAL_CAMERA_ERROR:
        self.cameraError.emit(args[0])
    elif commandIdx == consts.NetworkCommands.SIGNAL_CAMERA_STATUS_CHANGED:
        self.cameraStatusChanged.emit(args[0])
    elif commandIdx == consts.NetworkCommands.SIGNAL_CAMERA_BRIGHTNESS_CHANGED:
        self.cameraBrightnessChanged.emit(args[0])
    elif commandIdx == consts.NetworkCommands.SIGNAL_CAMERA_CONSTRAST_CHANGED:
        self.cameraContrastChanged.emit(args[0])
    elif commandIdx == consts.NetworkCommands.SIGNAL_RECORDING_STARTED:
        self.recordingStarted.emit()
    elif commandIdx == consts.NetworkCommands.SIGNAL_RECORDING_STOPPED:
        self.recordingStopped.emit()
    elif commandIdx == consts.NetworkCommands.SIGNAL_RECORDING_SAVED_START_PROCESSING:
        self.recordingSavedStartProcessing.emit()
    elif commandIdx == consts.NetworkCommands.SIGNAL_RECORDING_SAVE_FINISHED_PROCESSING:
        self.recordingSaveFinishedProcessing.emit(args[0])
    elif commandIdx == consts.NetworkCommands.SIGNAL_RECORDING_SAVE_ERROR_PROCESSING:
        self.recordingSaveErrorProcessing.emit(args[0])
```

Sending commands follows the same pattern, as the setContrast and setAnnotateText methods show.

```python
def _sendCommand(self, commandIndex, listOfArgs):
    """
    Send a given command to the remote camera

    args:
        commandIndex (consts.NetworkCommands): The command index of the message, what it's for
        listOfArgs (list of objects): List of arguments that will be serialised and passed over
    """
    if self._connection.state() == QtNetwork.QTcpSocket.ConnectedState:
        package = consts.NetworkParser.encodeMessage(commandIndex, listOfArgs)
        self._connection.write(package)
        if self._connection.waitForBytesWritten() is False:
            self.networkError.emit(self._connection.errorString())

def setContrast(self, value):
    """
    Set the camera's contrast

    args:
        value (int): The camera's Contrast
    """
    self._sendCommand(consts.NetworkCommands.SET_CONSTRAST, [value])

def setAnnotateText(self, text=None, colour=None, size=None, frameNumbers=None):
    """
    Set the Annotate text for the video and preview

    kwargs:
        text (str): The string to add. Empty string to clear the annotate
        colour (str): String name of the colour background, eg "black"
        size (int): The size of the text.
        frameNumbers (bool): If the frame count from the camera should be shown or not
    """
    self._sendCommand(consts.NetworkCommands.SET_ANNOTATE_TEXT, [text, colour, size, frameNumbers])
```

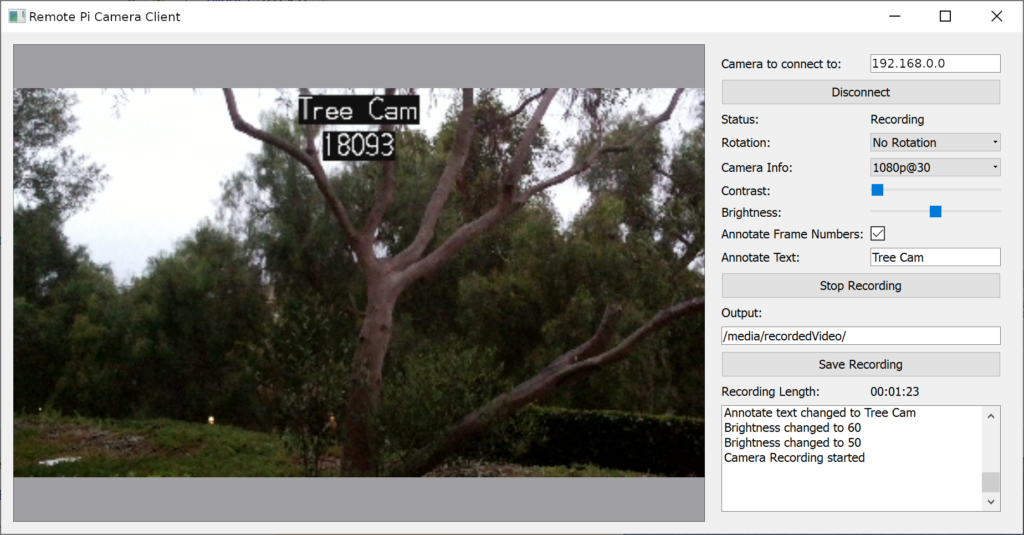

The client UI needs a few small changes to accommodate being network-based, such as a new hostname text box and a connect button. There are new signals on the PiCameraWrapperClient for network events and errors, so hooking them into the existing logging system is trivial but very effective.

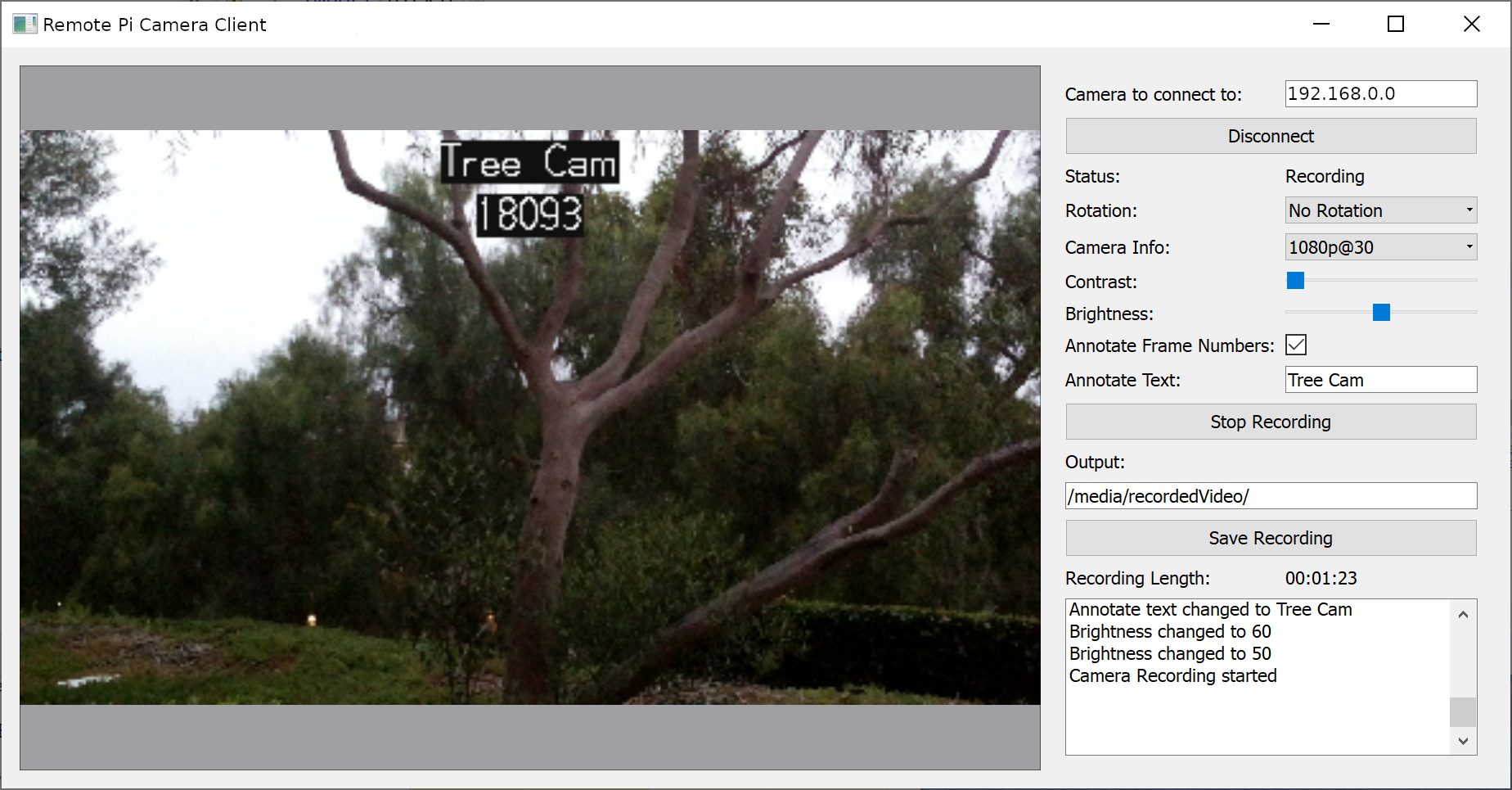

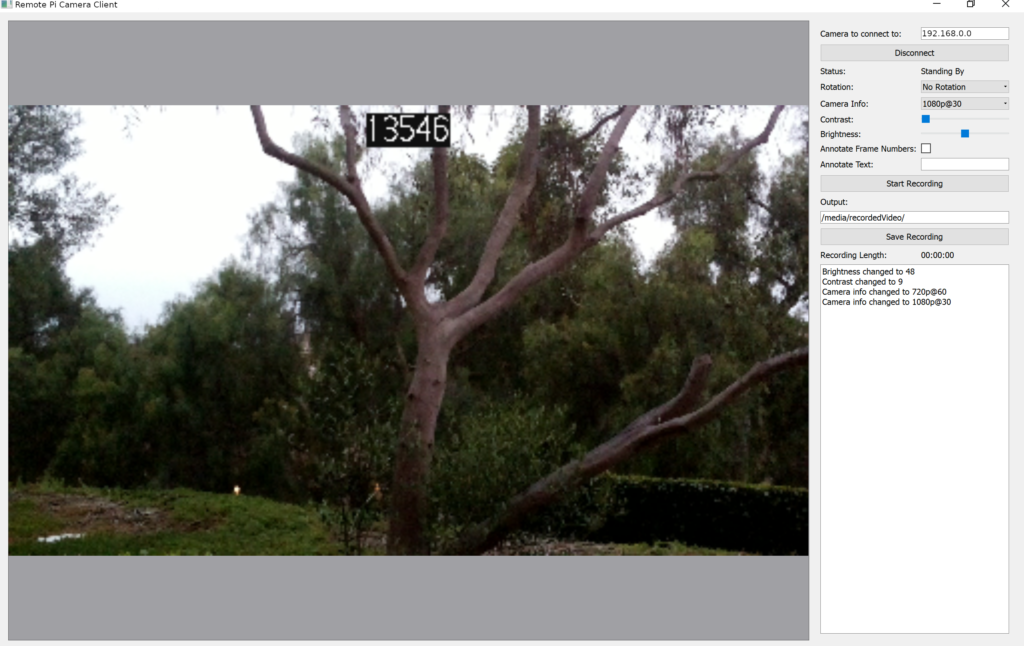

Running the server on the Raspberry Pi in a terminal and connecting to it from a Windows-based client shows that it works just as seamlessly as it did when run locally.

Everything from the rotation, changing the camera info (the resolution and frame rate), brightness, and the annotation text works as expected. The only noticeable issue is that the preview image is reduced in quality, as it is scaled down before being sent.

Recording, saving, and transcoding work exactly as they did locally, and currently use the same system for the output path, but it could be extended to

As the camera is a server-based design, it’s possible to connect multiple clients to a single camera to view the preview, trigger recording, and set parameters. It’s also possible to use this design to have multiple clients on a single machine, each one connecting to a different camera for multi-camera projects.

That concludes this three-part series about building a system to remotely capture video on a Raspberry Pi using Python and Qt. This is a fantastic base, and there’s a wide range of projects built on it coming soon, so don’t forget to subscribe to make sure you get notified when they go live.

– Geoff